AI, LLMs and the future of coding

July 15, 2025

Following up on the previous article, an introduction to AI and LLMs, let us see how AI and LLMs are changing the landscape of coding.

Summary of AI and LLMs

AI is a field that aims to build computer programs with human-level intelligence. Machine learning is a sub-field of AI that trains computer programs on data and then uses the trained program (called a model) on new problems. An LLM (large language model) is a machine learning system that has learned human language by being trained on large amounts of text. If you would like to learn more about the basics, see here 👉 A gentle introduction to AI and LLM.

LLMs are built to learn languages. As it happens, computer programs are also written in (programming) languages. Because of the vast amount of freely available open source code, all current LLMs have been trained on code, and they have become pretty good at writing code in almost all popular programming languages.

This article is a summary of what you can do with LLMs when it comes to coding. It is mainly targeted at programmers and others working in the technology field. Of course, AI and LLMs are going to impact several other fields, but we are not going to touch on those here.

What can be built

Within a software/technology organization, there are mainly two things that can be done with LLMs:

AI-driven apps

If you already have an app, or an idea for one, that solves a customer problem, you can incorporate LLMs as part of the solution. LLMs can make the solution more efficient or improve the experience for your users.

Let us say you are building an app that lets your users track their eating habits and then guides them on tweaks they can make to their diet based on their medical requirements. You would like users to upload pictures of their meals throughout the day so that the app can count the nutrients (protein, carbs, etc.) and make suggestions. In such an app, analysing each picture and calculating the nutrient breakdown of each plate can be outsourced to an LLM agent, while the app itself keeps track of the user's meal history in a local database.
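To make that split of responsibilities concrete, here is a minimal sketch, assuming SQLite as the local database. `analyse_plate` is a hypothetical stand-in for the LLM call: it returns fixed numbers so the sketch runs offline, whereas a real app would send the image to a vision-capable model and parse its answer. The table layout and function names are illustrative only.

```python
import sqlite3
from dataclasses import dataclass
from datetime import date


@dataclass
class NutrientBreakdown:
    protein_g: float
    carbs_g: float
    fat_g: float


def analyse_plate(image_bytes: bytes) -> NutrientBreakdown:
    """Hypothetical stand-in for the LLM call (the part that is outsourced).

    A real implementation would send image_bytes to an LLM and parse the
    nutrient estimate from its reply; here we return fixed numbers.
    """
    return NutrientBreakdown(protein_g=25.0, carbs_g=60.0, fat_g=15.0)


def init_db(conn: sqlite3.Connection) -> None:
    # The app, not the LLM, owns the user's meal history.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS meals (
               user_id INTEGER, eaten_on TEXT,
               protein_g REAL, carbs_g REAL, fat_g REAL)"""
    )


def log_meal(conn: sqlite3.Connection, user_id: int, eaten_on: date,
             image_bytes: bytes) -> NutrientBreakdown:
    breakdown = analyse_plate(image_bytes)  # ask the LLM for the breakdown
    conn.execute(
        "INSERT INTO meals VALUES (?, ?, ?, ?, ?)",
        (user_id, eaten_on.isoformat(),
         breakdown.protein_g, breakdown.carbs_g, breakdown.fat_g),
    )
    return breakdown


def daily_protein(conn: sqlite3.Connection, user_id: int, day: date) -> float:
    # Aggregation over the history is plain SQL; no LLM is needed here.
    row = conn.execute(
        "SELECT COALESCE(SUM(protein_g), 0) FROM meals "
        "WHERE user_id = ? AND eaten_on = ?",
        (user_id, day.isoformat()),
    ).fetchone()
    return row[0]
```

The design choice worth noting is that the LLM is called once per picture, and everything afterwards (history, daily totals, weekly trends) is ordinary application code working on stored numbers.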

Customer service via a chat interface is another easy-to-integrate service. Fundamentally, LLMs have just one interface: you ask a question in natural language, optionally augmented with an image, audio or video, and the LLM responds with an answer. So any problem that fits this pattern is a candidate for solving with LLMs.
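The "one interface" idea can be sketched as a single request type: some text plus optional media. The field names below (`content`, `type`, `mime_type`, `data`) are assumptions for illustration; every provider defines its own schema, though several use a similar list-of-parts shape in which binary media is base64-encoded.

```python
import base64
from dataclasses import dataclass, field


@dataclass
class Attachment:
    mime_type: str  # e.g. "image/jpeg" or "audio/wav"
    data: bytes


@dataclass
class Prompt:
    text: str
    attachments: list[Attachment] = field(default_factory=list)


def to_request(prompt: Prompt) -> dict:
    """Serialise a prompt into a JSON-friendly payload (hypothetical schema)."""
    parts = [{"type": "text", "text": prompt.text}]
    for attachment in prompt.attachments:
        parts.append({
            "type": "media",
            "mime_type": attachment.mime_type,
            # Binary data is base64-encoded so it can travel inside JSON.
            "data": base64.b64encode(attachment.data).decode("ascii"),
        })
    return {"content": parts}
```

A customer service question is then just `to_request(Prompt("Where is my order?"))`, and the diet app's plate analysis is the same call with an image attached.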

Code more efficiently

Another use of LLMs is in actually coding up whatever app you are building. It could be a typical web application, a workflow automation, or just a Bash script.

Product managers can use AI to wireframe ideas, build prototypes and run quick demos for stakeholders before getting the engineering team to implement them fully. The engineering team can also use LLMs to code up the app more quickly (more on this below).

It is also possible to use AI to automate other routine tasks that are part of the team's daily workflow. For example, engineers can use LLMs to summarise recent commit messages to get a sense of what other developers on the team have been doing. Reports and presentations can also be built automatically. Basically, let AI take over the routine jobs to free up the team's time for more creative work.
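As a sketch of the commit-summary idea, assuming you run it inside a git repository: the script below collects the last week's commit subjects with `git log`, groups them by author, and wraps them in a prompt. Sending the prompt to an LLM is left out because it depends on the provider you use; the prompt wording is just an example.

```python
import subprocess
from collections import defaultdict


def read_git_log(since: str = "1 week ago", repo: str = ".") -> str:
    # %an = author name, %x09 = a tab character, %s = commit subject
    result = subprocess.run(
        ["git", "log", f"--since={since}", "--pretty=format:%an%x09%s"],
        cwd=repo, capture_output=True, text=True, check=True,
    )
    return result.stdout


def build_summary_prompt(log_text: str) -> str:
    """Group commit subjects by author and wrap them in a prompt for an LLM."""
    by_author: dict[str, list[str]] = defaultdict(list)
    for line in log_text.splitlines():
        if "\t" not in line:
            continue  # skip blank or malformed lines
        author, subject = line.split("\t", 1)
        by_author[author].append(subject)
    sections = []
    for author in sorted(by_author):
        commits = "\n".join(f"  - {s}" for s in by_author[author])
        sections.append(f"{author}:\n{commits}")
    return (
        "Summarise in a few bullet points what each developer worked on "
        "this week, based on these commit messages:\n\n" + "\n\n".join(sections)
    )
```

Usage would be `build_summary_prompt(read_git_log())`, with the result pasted into, or sent via API to, the LLM of your choice.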

Agentic coding

Over the past several decades, web application development has been moving towards assembling existing pieces. Most problems that web developers have to solve now have an off-the-shelf solution, and the developer's job is to judiciously select tools and services and put them together. For example, payment processing, audio and video hosting and processing, and streaming have each been componentized. Additionally, the rise of frameworks like Django and Ruby on Rails has reduced the boilerplate code developers have to write for each application. These frameworks provide generators that automate routine tasks like implementing CRUD interfaces and user logins. LLMs and other AI tools can be seen as another step in the same direction.

Vibe coding is the name given to a style of building an application where you describe an application idea to an AI, using one or more prompts, and it produces the full running application. Although often derided as a meme, this technique does have its uses: it works well for personal projects and quick prototypes. Product managers can use it to code up a prototype instead of writing a spec.

There are AI extensions available for several IDEs that provide smart autocompletion. You can describe a small-scale problem in a comment, e.g. "write a function which will look up every name from a list of names in the table and return the phone number of each name if it is present". Once you press Tab, the AI agent generates the code, which you review and modify if needed. This kind of small-scale AI assistance saves developer time.
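For illustration, this is the kind of function such a comment might produce. It is a hand-written sketch, not the output of any particular tool, and it assumes the "table" is an in-memory dict mapping names to phone numbers; in a real codebase it might just as well be a database table, which is exactly the sort of detail to check when reviewing.

```python
# write a function which will look up every name from a list of names in the
# table and return the phone number of each name if it is present
def lookup_phone_numbers(names: list[str], table: dict[str, str]) -> dict[str, str]:
    """Return {name: phone} for every name that is present in the table.

    Names missing from the table are simply left out of the result.
    """
    return {name: table[name] for name in names if name in table}
```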

Other examples of such small-scale prompts:

- Give me a regex that removes any non-alphabetic characters and spaces from a string
- Write a function to find the largest 5 numbers from an array
- Write unit tests for the Post class
- Center the profile pic div horizontally within the container div
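Answers to the first two prompts might look like the sketch below (hand-written, not captured from a tool). Even this tiny example shows why review matters: "non-alphabetic" is ambiguous, and this version keeps only ASCII letters, so accented letters such as "é" would also be stripped.

```python
import heapq
import re


def keep_letters(text: str) -> str:
    # [^A-Za-z] matches every character that is not an ASCII letter,
    # so digits, punctuation and spaces are all removed.
    return re.sub(r"[^A-Za-z]", "", text)


def largest_five(numbers: list[float]) -> list[float]:
    # heapq.nlargest returns the 5 largest items in descending order
    # (fewer if the list is shorter).
    return heapq.nlargest(5, numbers)
```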

There are also AI tools for generating documentation and helping you debug. You can also ask questions about the codebase, e.g. "show me the function that implements uniquifying the cities entered in shipping addresses" or "Is this method thread safe?"
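For the first question, the agent would point you to whatever function your codebase actually has. A hypothetical example of what such a function could look like, assuming addresses are dicts with a `city` key, is sketched below; having the code in front of you makes follow-up questions ("does it ignore case?") easy to check.

```python
def unique_cities(addresses: list[dict]) -> list[str]:
    """Return each city once, in first-seen order, ignoring case and extra spaces."""
    seen: set[str] = set()
    result: list[str] = []
    for address in addresses:
        city = " ".join(address.get("city", "").split())  # collapse whitespace
        key = city.casefold()  # case-insensitive comparison
        if city and key not in seen:
            seen.add(key)
            result.append(city)
    return result
```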

Some agentic coding tools act like a junior programmer working alongside you. You assign them tasks, they code them up, and you review, tweak if necessary and commit. Let us take the earlier example of the diet tracking app. To let the AI agent code it up, you break the project down into tasks and let the AI handle them one by one, much like how product managers break a project down into epics and stories.

The tasks could look like this:

- Implement a user login system so that users can log in via email and password, or via phone and OTP
- Users should be able to upload a picture of their plate of food and get the breakdown of nutrients in it
- Users should be able to view a summary of the nutrients in their meals, grouped by week and by day
- ...and more
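One way to keep this task-by-task workflow disciplined is to turn the backlog into one self-contained prompt per task. The sketch below assumes a hypothetical `Task` structure and prompt wording; it only builds the prompts, since how you hand them to an agent depends on the tool.

```python
from dataclasses import dataclass


@dataclass
class Task:
    title: str
    acceptance_criteria: list[str]


def task_prompt(task: Task, project_brief: str) -> str:
    """Build one focused prompt per task, like a story handed to a developer."""
    criteria = "\n".join(f"- {c}" for c in task.acceptance_criteria)
    return (
        f"Project: {project_brief}\n\n"
        f"Task: {task.title}\n"
        f"Done when:\n{criteria}\n\n"
        "Only change the code needed for this task and add tests for it."
    )


BACKLOG = [
    Task("User login", ["Login via email and password", "Login via phone and OTP"]),
    Task("Meal upload", ["User uploads a plate photo", "Nutrient breakdown is shown"]),
    Task("Nutrient summary", ["Summary grouped by week", "Summary grouped by day"]),
]

if __name__ == "__main__":
    for task in BACKLOG:
        # Hand each prompt to your coding agent, then review, tweak and
        # commit before moving on to the next task.
        print(task_prompt(task, "Diet tracking app"))
        print("-" * 40)
```

Keeping each task small with explicit acceptance criteria makes the agent's output easier to review, which matters given the review fatigue mentioned below.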

The developer is then freed from lower-level implementation details and can focus on the bigger picture of keeping the architecture right.

As AI-assisted coding becomes mainstream, the developer's job will move up towards that of a product manager and a system designer. AI cannot have a vision, so supplying the vision for the product is one of the most important aspects of the job. Knowledge of algorithms, system design, DevOps, scaling and problem solving will also be necessary to guide the AI agent correctly.

AI agents are very much a work in progress. There can be a wait of several minutes between a prompt and the generated code. Sometimes LLMs hallucinate and use an API that does not exist. Hopefully, these shortcomings will be addressed in the coming months. And since using AI agents means more reviewing than writing code, review fatigue may set in.
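Hallucinated APIs are one of the cheaper problems to catch mechanically. Below is a small sketch for Python code, under the assumption that you can import the module in question: it checks whether a dotted name that the generated code relies on actually exists. It only checks names, not signatures or behaviour, so it complements review rather than replacing it. Note that importing a module runs its top-level code, so use it on libraries you already trust.

```python
import importlib


def api_exists(module_name: str, attr_path: str) -> bool:
    """Return True if module_name exposes attr_path, e.g. "loads" or "Path.exists"."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        return False  # the module itself does not exist
    for part in attr_path.split("."):
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True
```

For example, `api_exists("json", "parse")` returns `False` (that is JavaScript's `JSON.parse`), while `api_exists("json", "loads")` returns `True`.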

A possible side effect of AI-assisted code generation is that programming language skills will become less relevant, and companies will no longer hire just for languages. It is pushing developers to focus on skills usually reserved for senior developers.

Understanding the AI Agent

LLM agent APIs are quite simple: they accept a prompt and send back a response. Current AI agents also have no memory between requests (the APIs are stateless). So it is fine to modify AI-generated code freely, since the agent will re-analyse the code anyway the next time you ask it to do anything. For the same reason, it is even possible to use two or more AI agents at the same time on the same codebase.
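Statelessness has a practical consequence: any "memory" lives on the client, which resends the whole conversation on every call. The sketch below shows that pattern. The `system`/`user`/`assistant` role names follow a convention used by several chat APIs, and `echo_model` is a hypothetical stand-in for a real LLM call that just reports how many messages it was sent.

```python
from typing import Callable

# A "model" here is any function that maps the full message list to a reply.
Model = Callable[[list[dict]], str]


class ChatSession:
    """Keeps the conversation on the client side, because the API is stateless."""

    def __init__(self, model: Model, system: str):
        self.model = model
        self.messages: list[dict] = [{"role": "system", "content": system}]

    def ask(self, text: str) -> str:
        self.messages.append({"role": "user", "content": text})
        reply = self.model(self.messages)  # the whole history is sent every time
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def echo_model(messages: list[dict]) -> str:
    # Hypothetical stand-in for a real LLM call.
    return f"I received {len(messages)} messages"
```

With `echo_model`, the first `ask` reports 2 messages and the second reports 4, which is also why long sessions use more tokens with every turn.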

Whenever you ask the agent to work on a task, it reads the code or documents you have provided and proceeds to make the changes. The code and other material the agent looks at in order to figure out what changes to make is called its context. MCP (Model Context Protocol) is a standard interface for connecting AI coding agents to external services reliably and securely, so it is possible to connect tickets, documents, API documentation, calendars, CRMs, Slack or code repositories via MCP. The more relevant context you provide, the more accurate the agent-generated code can be. You can also provide a few examples of the code you want generated (called few-shot prompting).
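Few-shot prompting is easy to picture as plain string assembly: context first, then a handful of worked examples, then the real request. The layout and labels below are an assumption for illustration; agents and models differ in how they prefer examples to be formatted.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]],
                    context: str, request: str) -> str:
    """Combine an instruction, some context, worked examples and the real request."""
    parts = [instruction, "", "Relevant code:", context, ""]
    # Each (request, code) pair shows the model the style we expect back.
    for i, (example_request, example_code) in enumerate(examples, start=1):
        parts += [f"Example {i} request:", example_request,
                  f"Example {i} code:", example_code, ""]
    parts += ["Request:", request]
    return "\n".join(parts)
```

In practice the `context` would be the files, tickets or documents the agent gathered (for instance through MCP), and the examples would be snippets from your own codebase that show its conventions.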

In summary, it is best to treat the AI agent as a junior member of the team: quick and confident in its responses, but not always correct. And since the agent does not handle DevOps, deployment architecture, scaling, monitoring, alerts, metrics etc. must be handled manually.

For every task, the AI agent works within its context, i.e. the portion of the code it can see and on which the LLM bases its answers. Context is measured in tokens, which can be whole words, parts of words or individual characters. AI coding agents charge by the number of tokens used, so costs can rack up quickly! By my rough estimate, an agent can cost about as much as a junior to mid-level developer and do the work of about 3-5 developers in the same time.
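A back-of-the-envelope cost calculation helps explain why costs rack up. The sketch below uses the common rule of thumb of roughly 4 characters per token for English text (real tokenizers differ per model), and the prices in the example are made-up placeholders, not any provider's actual rates.

```python
def estimate_tokens(text: str) -> int:
    # Rough rule of thumb for English text: about 4 characters per token,
    # rounded up. Real tokenizers differ per model; treat this as a ballpark.
    return (len(text) + 3) // 4


def estimate_cost(input_tokens: int, output_tokens: int,
                  usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Cost in USD, given per-million-token prices from your provider's price list."""
    return (input_tokens * usd_per_m_input
            + output_tokens * usd_per_m_output) / 1_000_000
```

With hypothetical prices of $3 per million input tokens and $15 per million output tokens, one agent step that reads 200,000 tokens of context and writes 10,000 tokens costs `estimate_cost(200_000, 10_000, 3.0, 15.0)`, i.e. $0.75. An agent that makes 100 such steps in a day would spend about $75, and most of that goes on re-reading context rather than on writing code.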

Conclusion

Code generation via LLMs is a fast-evolving field. New tools and capabilities are being launched every day. It will take some time for the field to stabilize and for its true impact on the industry to become apparent. In the meantime, don't expect LLMs to solve big problems, e.g. they will not design a YouTube-like recommendation algorithm or debug the mysterious slowdown in your app. However, agentic coding will improve and gain new capabilities in the near future.

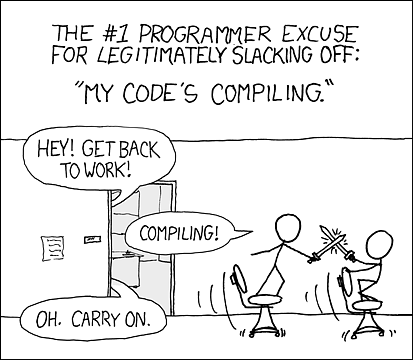

LLMs are not compilers, so we cannot blindly accept their output as correct. However, we should see AI-friendly programming languages and frameworks sometime in the near future. Hopefully, they will standardize the ad hoc prompt engineering that is necessary now. An "Excel of coding", a tool that lets even non-programmers do basic programming, would be a great breakthrough.
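Since the output cannot be trusted the way compiler output can, a simple habit is to gate generated code behind checks you wrote yourself. The sketch below is one minimal version for Python, assuming the generated code defines a single function: it checks that the code parses, defines the expected name, and passes a few known input/output cases. It uses `exec`, so untrusted code should only ever be run this way inside a sandbox.

```python
import ast


def passes_checks(generated_source: str, func_name: str,
                  cases: list[tuple[tuple, object]]) -> bool:
    """Accept generated code only if it parses, defines func_name and passes every case."""
    try:
        ast.parse(generated_source)  # 1. is it valid Python at all?
    except SyntaxError:
        return False
    namespace: dict = {}
    try:
        exec(generated_source, namespace)  # 2. run it (sandbox untrusted code!)
        func = namespace.get(func_name)
        if not callable(func):
            return False
        # 3. does it behave as expected on known inputs?
        return all(func(*args) == expected for args, expected in cases)
    except Exception:
        return False  # any crash counts as a failed check
```

A proper unit test suite does the same job at scale; the point is that acceptance is decided by checks you control, not by how confident the model sounds.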

References and further reading

- Vibe coding by a PM

- AI Tools review

- Coding agents have no secret sauce

- Some cool apps which incorporate AI: Coherence, Character, Base64 (document processing)

- Major Agentic coding tools: Cursor, Claude Code, Windsurf, Gemini Code Assist, Devin, Replit, v0, Bolt, n8n, Zapier Agent, Warp, Tempo Labs, Sourcegraph, Trae, Amp, Cline, Augment, Roo, Continue, Fynix, Pythagora, Aider and more.

- Vibe coding apps (point-and-click programming): Lovable.dev (no custom backend; React and Supabase DB based; fully hosted)

- Development tools: Tabnine (code completion), GitHub Copilot, Mintlify (automatic documentation), DeepCode (debugging and code review)

- Some advice for coders